INFORMATION THEORY AND CODING Question Bank

PART B (16 Marks)

UNIT – I

1. (i) How will you calculate channel capacity? (2)

(ii) State the channel coding theorem and the channel capacity theorem (5)

(iii) Calculate the entropy for the given sample data AAABBBCCD (3)

(iv) Prove the Shannon information capacity theorem (6)
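For part (iii), a quick numerical check. This minimal sketch treats the relative frequencies in the string as the symbol probabilities (an assumption: the question does not say how they are estimated) and applies H = -Σ p_i log2 p_i; `empirical_entropy` is an illustrative name.

```python
import math
from collections import Counter

def empirical_entropy(data):
    """Entropy in bits/symbol, using relative frequencies as probabilities."""
    counts = Counter(data)
    total = len(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# p(A) = p(B) = 3/9, p(C) = 2/9, p(D) = 1/9
h = empirical_entropy("AAABBBCCD")
print(f"H = {h:.4f} bits/symbol")
```

With these probabilities the result is about 1.891 bits/symbol.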

2. (i) Use differential entropy to compare the randomness of random variables (4)

(ii) A four-symbol alphabet has the following probabilities:

Pr(a0) = 1/2

Pr(a1) = 1/4

Pr(a2) = 1/8

Pr(a3) = 1/8

and an entropy of 1.75 bits. Find a codebook for this four-letter alphabet that satisfies the source coding theorem (4)
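Because every probability in part (ii) is a negative power of two, a prefix code can meet the source coding theorem's bound L ≥ H with equality. The sketch checks one such codebook (0, 10, 110, 111 is an illustrative choice, not the only answer) for the prefix condition, Kraft's inequality and average length.

```python
import math

probs = {"a0": 1/2, "a1": 1/4, "a2": 1/8, "a3": 1/8}
codebook = {"a0": "0", "a1": "10", "a2": "110", "a3": "111"}  # one valid choice

entropy = -sum(p * math.log2(p) for p in probs.values())
avg_len = sum(probs[s] * len(codebook[s]) for s in probs)
kraft = sum(2 ** -len(w) for w in codebook.values())

# Prefix condition: no codeword is the beginning of another codeword
words = list(codebook.values())
prefix_free = not any(a != b and b.startswith(a) for a in words for b in words)

print(entropy, avg_len, kraft, prefix_free)
```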

(iii) Write the entropy of a binary symmetric source (4)

(iv) Write down the channel capacity of a binary channel (4)
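Parts (iii) and (iv) in code form. The sketch assumes the binary channel is a binary symmetric channel with crossover probability p, for which C = 1 - H(p); a source emitting 1 with probability p has entropy H(p). Function names are illustrative.

```python
import math

def binary_entropy(p):
    """H(p) in bits for a binary source that emits 1 with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p):
    """Capacity in bits/channel use of a binary symmetric channel (assumed model)."""
    return 1.0 - binary_entropy(p)

for p in (0.0, 0.1, 0.5):
    print(p, round(binary_entropy(p), 4), round(bsc_capacity(p), 4))
```

The entropy peaks at 1 bit for p = 0.5, which is exactly where the channel capacity drops to zero.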

3. (a) A discrete memoryless source has an alphabet of five symbols whose probabilities of occurrence are as described here:

Symbols: X1 X2 X3 X4 X5

Probability: 0.2 0.2 0.1 0.1 0.4

Compute the Huffman code for this source. Also calculate the efficiency of the source encoder (8)
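A minimal sketch of the binary Huffman procedure using Python's `heapq`: the two least probable nodes are merged repeatedly, and a 0 or 1 is prefixed to every codeword beneath each. Ties are broken by insertion order, so individual codewords may differ from a hand-worked answer, but any valid Huffman code gives the same average length (2.2 bits here) and therefore the same efficiency H/L.

```python
import heapq
import math
from itertools import count

def huffman_code(probs):
    """Binary Huffman code: repeatedly merge the two least probable nodes."""
    tiebreak = count()  # keeps heap comparisons away from the dicts
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p0, _, c0 = heapq.heappop(heap)
        p1, _, c1 = heapq.heappop(heap)
        merged = {s: "0" + w for s, w in c0.items()}
        merged.update({s: "1" + w for s, w in c1.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]

probs = {"X1": 0.2, "X2": 0.2, "X3": 0.1, "X4": 0.1, "X5": 0.4}
code = huffman_code(probs)
avg_len = sum(probs[s] * len(w) for s, w in code.items())
entropy = -sum(p * math.log2(p) for p in probs.values())
efficiency = entropy / avg_len
print(code)
print(f"L = {avg_len:.2f} bits, H = {entropy:.4f} bits, efficiency = {efficiency:.2%}")
```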

(b) A voice-grade channel of the telephone network has a bandwidth of 3.4 kHz. Calculate

(i) the information capacity of the telephone channel for a signal-to-noise ratio of 30 dB, and

(ii) the minimum signal-to-noise ratio required to support information transmission through the telephone channel at a rate of 9.6 kb/s (8)
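Both parts follow from the Shannon-Hartley formula C = B log2(1 + S/N). The sketch converts 30 dB to a power ratio of 1000 for part (i) and inverts the formula for part (ii); it is a numerical aid, not a derivation.

```python
import math

B = 3.4e3                      # bandwidth in Hz
snr_db = 30.0
snr = 10 ** (snr_db / 10)      # 30 dB -> 1000 as a power ratio

capacity = B * math.log2(1 + snr)          # C = B log2(1 + S/N)
print(f"(i)  C = {capacity / 1e3:.2f} kb/s")

R = 9.6e3                      # required rate in b/s
snr_min = 2 ** (R / B) - 1                 # solve R = B log2(1 + S/N) for S/N
print(f"(ii) S/N_min = {snr_min:.3f} = {10 * math.log10(snr_min):.2f} dB")
```

This gives roughly 33.9 kb/s for (i) and an S/N of about 6.08 (about 7.8 dB) for (ii).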

4. A discrete memoryless source has an alphabet of seven symbols whose probabilities of occurrence are as described below:

Symbol: s0 s1 s2 s3 s4 s5 s6

Prob : 0.25 0.25 0.0625 0.0625 0.125 0.125 0.125

(i) Compute the Huffman code for this source, moving combined symbols as high as possible (10)

(ii) Calculate the coding efficiency (4)

(iii) Why does the computed source code have an efficiency of 100%? (2)
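For part (iii): every probability here is a power of 1/2, so a code with lengths l_k = -log2 p_k exists and its average length equals the entropy exactly. A Huffman code must reach that bound, because L = H is only possible with those lengths. The sketch computes the lengths directly rather than rebuilding the tree.

```python
import math

probs = [0.25, 0.25, 0.0625, 0.0625, 0.125, 0.125, 0.125]   # s0 ... s6

# For a dyadic source the optimal (Huffman) lengths are exactly -log2(p)
lengths = [int(-math.log2(p)) for p in probs]
avg_len = sum(p * l for p, l in zip(probs, lengths))
entropy = -sum(p * math.log2(p) for p in probs)
kraft = sum(2 ** -l for l in lengths)

print(lengths, avg_len, entropy, entropy / avg_len, kraft)
```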

5. (i) Consider the binary sequence 111010011000101110100. Use the Lempel-Ziv algorithm to encode this sequence. Assume that the binary symbols 1 and 0 are already in the codebook (12)

(ii) What are the advantages of the Lempel-Ziv encoding algorithm over Huffman coding? (4)
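A minimal sketch of the parsing step for part (i): the sequence is split into the shortest subsequences not yet in the codebook, and each new phrase is represented by the codebook position of its prefix plus one innovation bit. Positions 1 and 2 hold 0 and 1, as the question states. `lz_parse` is an illustrative name, and converting each pair into a fixed-length binary block is left out.

```python
def lz_parse(bits, initial=("0", "1")):
    """Split `bits` into the shortest phrases not yet in the codebook."""
    book = {phrase: i + 1 for i, phrase in enumerate(initial)}  # phrase -> position
    phrases, pairs = [], []
    current = ""
    for b in bits:
        current += b
        if current not in book:
            book[current] = len(book) + 1
            phrases.append(current)
            # (position of the prefix already in the book, innovation bit)
            pairs.append((book[current[:-1]], current[-1]))
            current = ""
    return phrases, pairs, current   # `current` is any unfinished tail

phrases, pairs, tail = lz_parse("111010011000101110100")
for pos, (phrase, pair) in enumerate(zip(phrases, pairs), start=3):
    print(pos, phrase, pair)
print("unparsed tail:", tail)
```

For this sequence the parse yields 11, 10, 100, 110, 00, 101, 1101, leaving a final 00 that is already in the codebook (position 7).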

6. A discrete memoryless source has an alphabet of five symbols with the output probabilities given here:

[X] = [x1 x2 x3 x4 x5 ]

P[X] = [0.45 0.15 0.15 0.10 0.15]

Compute two different Huffman codes for this source. For these two codes, find

(i) Average codeword length

(ii) Variance of the codeword length over the ensemble of source symbols (16)
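Working the merges by hand: 0.10 + 0.15 = 0.25, then 0.15 + 0.15 = 0.30, then 0.25 + 0.30 = 0.55, then 0.55 + 0.45 = 1. No merged sum ties with a remaining probability, so moving a combined symbol high or low in the list does not change the lengths for these probabilities: x1 gets 1 bit and x2 to x5 get 3 bits each, and two different codes can differ only in how the 0/1 labels and the tied 0.15 symbols are assigned. The sketch evaluates L = Σ p_k l_k and the variance σ² = Σ p_k (l_k - L)² for those lengths.

```python
probs = [0.45, 0.15, 0.15, 0.10, 0.15]       # x1 ... x5
lengths = [1, 3, 3, 3, 3]                    # from the hand-worked merges

avg_len = sum(p * l for p, l in zip(probs, lengths))
variance = sum(p * (l - avg_len) ** 2 for p, l in zip(probs, lengths))
print(f"L = {avg_len:.2f}, variance = {variance:.2f}")
```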

7. A discrete memoryless source X has five symbols x1, x2, x3, x4 and x5 with probabilities p(x1) = 0.4, p(x2) = 0.19, p(x3) = 0.16, p(x4) = 0.15 and p(x5) = 0.1

(i) Construct a Shannon-Fano code for X, and calculate the efficiency of the code (7)

(ii) Repeat for the Huffman code and compare the results (9)
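A side-by-side sketch for parts (i) and (ii). The Shannon-Fano routine splits the probability-ordered list where the totals of the two groups are closest, prefixes 0 and 1, and recurses. The Huffman average length is obtained as the sum of all merged probabilities, which equals Σ p_k l_k for a binary Huffman tree. Function names are illustrative.

```python
import heapq
import math

def shannon_fano(symbols):
    """symbols: list of (name, prob) sorted by decreasing probability."""
    if len(symbols) == 1:
        return {symbols[0][0]: ""}
    total = sum(p for _, p in symbols)
    best_k, best_diff, running = 1, float("inf"), 0.0
    for k in range(1, len(symbols)):
        running += symbols[k - 1][1]
        diff = abs(total - 2 * running)       # |upper group - lower group|
        if diff < best_diff:
            best_k, best_diff = k, diff
    codes = {name: "0" + w for name, w in shannon_fano(symbols[:best_k]).items()}
    codes.update({name: "1" + w for name, w in shannon_fano(symbols[best_k:]).items()})
    return codes

def huffman_avg_length(probs):
    """Binary Huffman average length = sum of the probabilities of all merged nodes."""
    heap = list(probs)
    heapq.heapify(heap)
    total = 0.0
    while len(heap) > 1:
        merged = heapq.heappop(heap) + heapq.heappop(heap)
        total += merged
        heapq.heappush(heap, merged)
    return total

source = [("x1", 0.4), ("x2", 0.19), ("x3", 0.16), ("x4", 0.15), ("x5", 0.1)]
probs = dict(source)
entropy = -sum(p * math.log2(p) for p in probs.values())

sf = shannon_fano(source)
sf_len = sum(probs[s] * len(w) for s, w in sf.items())
hf_len = huffman_avg_length(probs.values())

print(sf)
print(f"H = {entropy:.4f} bits")
print(f"Shannon-Fano: L = {sf_len:.2f}, efficiency = {entropy / sf_len:.2%}")
print(f"Huffman:      L = {hf_len:.2f}, efficiency = {entropy / hf_len:.2%}")
```

Shannon-Fano gives L = 2.25 bits and Huffman L = 2.2 bits against H ≈ 2.15 bits, so the Huffman code is the more efficient here (about 97.7% versus 95.5%).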

8. Two sources S1 and S2 emit messages x1, x2, x3 and y1, y2, y3 with the joint probability P(X, Y) shown in matrix form:

$$P(X, Y) = \begin{bmatrix} 3/40 & 1/40 & 1/40 \\ 1/20 & 3/20 & 1/20 \\ 1/8 & 1/8 & 3/8 \end{bmatrix}$$

Calculate the entropies H(X), H(Y), H(X/Y) and H(Y/X) (16)
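A numerical check, assuming the rows of the matrix correspond to x1, x2, x3 and the columns to y1, y2, y3. The marginals are row and column sums, and the conditional entropies come from the chain rule H(X/Y) = H(X,Y) - H(Y) and H(Y/X) = H(X,Y) - H(X).

```python
import math
from fractions import Fraction as F

# rows: x1, x2, x3; columns: y1, y2, y3
P = [
    [F(3, 40), F(1, 40), F(1, 40)],
    [F(1, 20), F(3, 20), F(1, 20)],
    [F(1, 8),  F(1, 8),  F(3, 8)],
]
assert sum(sum(row) for row in P) == 1       # a valid joint distribution

def H(probs):
    """Entropy in bits of an iterable of probabilities."""
    return -sum(float(p) * math.log2(float(p)) for p in probs if p > 0)

px = [sum(row) for row in P]                 # marginal of X
py = [sum(col) for col in zip(*P)]           # marginal of Y
H_X, H_Y = H(px), H(py)
H_XY = H(p for row in P for p in row)        # joint entropy
H_X_given_Y = H_XY - H_Y                     # chain rule
H_Y_given_X = H_XY - H_X

print(f"H(X)={H_X:.4f}  H(Y)={H_Y:.4f}  H(X,Y)={H_XY:.4f}")
print(f"H(X/Y)={H_X_given_Y:.4f}  H(Y/X)={H_Y_given_X:.4f}")
```

This gives H(X) ≈ 1.299, H(Y) ≈ 1.539, H(X/Y) ≈ 1.130 and H(Y/X) ≈ 1.371 bits.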

9. Apply the Huffman coding procedure to the following message ensemble and determine the average length of the encoded message. Also determine the coding efficiency. Use a coding alphabet of size D = 4. There are 10 symbols.

X = [x1, x2, x3, …, x10]

P[X] = [0.18, 0.17, 0.16, 0.15, 0.10, 0.08, 0.05, 0.05, 0.04, 0.02] (16)
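A sketch of the D-ary Huffman procedure, taking the last probability as 0.02 so that the ten probabilities sum to 1. Zero-probability dummy symbols are added until (n - 1) is divisible by (D - 1); with n = 10 and D = 4 none are needed. At each step the D least probable nodes are merged, and the average length is accumulated as the sum of the merged probabilities.

```python
import heapq
import math

def dary_huffman_avg_length(probs, D):
    """Average codeword length (in D-ary digits) of a D-ary Huffman code."""
    heap = list(probs)
    # pad with zero-probability dummy symbols until (n - 1) is a multiple of (D - 1)
    while (len(heap) - 1) % (D - 1) != 0:
        heap.append(0.0)
    heapq.heapify(heap)
    total = 0.0
    while len(heap) > 1:
        merged = sum(heapq.heappop(heap) for _ in range(D))  # merge the D least probable
        total += merged          # every symbol under this node gains one digit
        heapq.heappush(heap, merged)
    return total

probs = [0.18, 0.17, 0.16, 0.15, 0.10, 0.08, 0.05, 0.05, 0.04, 0.02]
D = 4
L = dary_huffman_avg_length(probs, D)
H_bits = -sum(p * math.log2(p) for p in probs)
efficiency = H_bits / (L * math.log2(D))     # H / (L log2 D)
print(f"L = {L:.2f} quaternary digits, H = {H_bits:.4f} bits, efficiency = {efficiency:.2%}")
```

For these values the sketch gives L = 1.65 quaternary digits and an efficiency of roughly 93%.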

UNIT II

1. (i) Compare and contrast DPCM and ADPCM (6)

(ii) Define pitch, period and loudness (6)

(iii) What is a decibel? (2)

(iv) What is the purpose of the DFT? (2)

2. (i) Explain delta modulation with examples (6)

(ii) Explain sub-band adaptive differential pulse code modulation (6)

(iii) What happens if speech is coded at low bit rates? (4)

3. With a block diagram, explain the DPCM system. Compare DPCM with PCM and DM systems (16)
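A minimal DPCM sketch, assuming a first-order predictor (the previous reconstructed sample) and a uniform quantizer with unlimited levels; the test signal and step size are illustrative. Because the predictor works on reconstructed rather than original samples, the decoder stays in step with the encoder, quantization errors do not accumulate, and the reconstruction error stays within half a quantizer step.

```python
import math

def dpcm_encode(samples, step):
    """DPCM with a previous-sample predictor and an (unclipped) uniform quantizer."""
    recon_prev = 0.0
    indices, recon = [], []
    for x in samples:
        prediction = recon_prev              # predict from the *reconstructed* past sample
        error = x - prediction               # prediction error is what gets quantized
        q = round(error / step)              # quantizer index sent over the channel
        recon_prev = prediction + q * step   # same reconstruction the decoder will form
        indices.append(q)
        recon.append(recon_prev)
    return indices, recon

def dpcm_decode(indices, step):
    """Rebuild samples by adding each dequantized difference to the previous output."""
    recon_prev = 0.0
    out = []
    for q in indices:
        recon_prev = recon_prev + q * step
        out.append(recon_prev)
    return out

signal = [math.sin(2 * math.pi * n / 32) for n in range(64)]
step = 0.05
idx, recon = dpcm_encode(signal, step)
print(idx[:8])
print(max(abs(x - y) for x, y in zip(signal, recon)))
```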

4. (i) Explain DM systems with a block diagram (8)

(ii) Consider a sine wave of frequency $f_m$ and amplitude $A_m$, which is applied to a delta modulator of step size $\Delta$. Show that slope overload distortion will occur if

$$A_m > \frac{\Delta}{2\pi f_m T_s}$$

where $T_s$ is the sampling period. What is the maximum power that may be transmitted without slope overload distortion? (8)
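The condition follows from comparing slopes. The staircase can change by at most Δ per sampling period, a slope of Δ/Ts, while the steepest slope of A_m sin(2π f_m t) is 2π f_m A_m; overload occurs when 2π f_m A_m > Δ/Ts. The largest overload-free amplitude is therefore Δ/(2π f_m Ts), and the corresponding maximum power, normalized to a 1 Ω load, is Δ²/(8π² f_m² Ts²). The sketch simulates a linear delta modulator with illustrative values (fm = 100 Hz, fs = 8 kHz, Δ = 0.1) to show the tracking error staying within one step below that amplitude and growing well beyond it above.

```python
import math

def delta_modulate(samples, step):
    """Linear DM: 1 bit per sample, staircase moves up or down by one step."""
    approx = 0.0
    bits, staircase = [], []
    for x in samples:
        bit = 1 if x >= approx else 0
        approx += step if bit else -step
        bits.append(bit)
        staircase.append(approx)
    return bits, staircase

def max_tracking_error(amplitude, fm, fs, step, n=2000):
    """Largest |input - staircase| for a sampled sine of the given amplitude."""
    ts = 1.0 / fs
    samples = [amplitude * math.sin(2 * math.pi * fm * k * ts) for k in range(n)]
    _, staircase = delta_modulate(samples, step)
    return max(abs(x - y) for x, y in zip(samples, staircase))

fm, fs, step = 100.0, 8000.0, 0.1            # illustrative values
a_max = step / (2 * math.pi * fm / fs)       # Δ / (2π fm Ts)
p_max = a_max ** 2 / 2                       # power of the largest sine, 1-ohm load
print(f"A_max = {a_max:.3f}, P_max = {p_max:.3f}")
for a in (0.5 * a_max, 3 * a_max):
    print(f"A = {a:.3f}: max |error| = {max_tracking_error(a, fm, fs, step):.3f}")
```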

5. With a block diagram, explain adaptive quantization and prediction with backward estimation in an ADPCM system (16)

6. (i) Explain delta modulation systems with block diagrams (8)

(ii) What are slope-overload distortion and granular noise, and how are they overcome in adaptive delta modulation? (8)

7. What is modulation? Explain how the adaptive delta modulator works with different algorithms. Compare delta modulation with adaptive delta modulation (16)
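One common family of ADM rules adapts the step size from the recent output bits. The sketch assumes a simple multiplicative rule (step × k after a repeated bit, step / k after a change, clamped between illustrative limits); it is not any particular named algorithm from the syllabus. Setting k = 1 turns it back into linear DM, which makes the comparison on a steep ramp easy.

```python
def adaptive_delta_modulate(samples, step_min=0.05, step_max=2.0, k=1.5):
    """ADM sketch: grow the step on repeated bits, shrink it when the bit flips.
    With k = 1 the step never changes, so this reduces to linear DM."""
    approx, step, prev_bit = 0.0, step_min, None
    bits, staircase = [], []
    for x in samples:
        bit = 1 if x >= approx else 0
        if prev_bit is not None:
            if bit == prev_bit:
                step = min(step * k, step_max)   # likely slope overload: speed up
            else:
                step = max(step / k, step_min)   # likely granular region: slow down
        approx += step if bit else -step
        bits.append(bit)
        staircase.append(approx)
        prev_bit = bit
    return bits, staircase

ramp = [0.5 * n for n in range(40)]              # steep test input
_, adm = adaptive_delta_modulate(ramp)
_, dm = adaptive_delta_modulate(ramp, k=1.0)     # fixed step = step_min
print("final error, ADM:", round(ramp[-1] - adm[-1], 3))
print("final error, DM: ", round(ramp[-1] - dm[-1], 3))
```

The fixed-step modulator falls far behind the ramp (slope overload), while the adaptive one catches up within about ten samples and then hunts around the input.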

8. Explain pulse code modulation and differential pulse code modulation (16)

UNIT III

1. Consider a Hamming code C which is determined by the parity check matrix

$$H = \begin{bmatrix} 1 & 1 & 0 & 1 & 1 & 0 & 0 \\ 1 & 0 & 1 & 1 & 0 & 1 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 & 1 \end{bmatrix}$$

(i) Show that the two vectors C1 = (0010011) and C2 = (0001111) are codewords of C, and calculate the Hamming distance between them (4)

(ii) Assume that a codeword c was transmitted and that a vector r = c + e is received. Show that the syndrome $S = rH^T$ depends only on the error vector e. (4)

(iii) Calculate the syndromes for all possible error vectors e with Hamming weight ≤ 1 and list them in a table. How can this be used to correct a single-bit error in an arbitrary position? (4)

(iv) What are the length n and the dimension k of the code? Why can the minimum Hamming distance dmin not be larger than three? (4)
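A sketch that checks parts (i) to (iii), using the parity check matrix with rows 1101100, 1011010 and 0111001. Syndromes are computed as s = rH^T modulo 2. For a single error in position i the syndrome is column i of H, and because all seven columns are distinct and nonzero, the table of error patterns with weight ≤ 1 covers all eight syndromes and can locate any single-bit error. Function names are illustrative.

```python
H = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]

def syndrome(r):
    """s = r H^T over GF(2)."""
    return tuple(sum(h * b for h, b in zip(row, r)) % 2 for row in H)

def hamming_distance(a, b):
    return sum(x != y for x, y in zip(a, b))

c1 = [0, 0, 1, 0, 0, 1, 1]
c2 = [0, 0, 0, 1, 1, 1, 1]
print(syndrome(c1), syndrome(c2), hamming_distance(c1, c2))

# Syndrome table for all error patterns of weight <= 1
table = {syndrome([0] * 7): None}
for i in range(7):
    e = [0] * 7
    e[i] = 1
    table[syndrome(e)] = i          # a single error in bit i gives column i of H

def correct_single_error(r):
    """Look up the syndrome and flip the indicated bit, if any."""
    pos = table[syndrome(r)]
    fixed = list(r)
    if pos is not None:
        fixed[pos] ^= 1
    return fixed

received = c1[:]
received[4] ^= 1                    # flip bit 5 (index 4)
print(correct_single_error(received) == c1)
```

For part (iv), n = 7 and k = 4, and C1 itself has weight 3, so dmin cannot exceed 3.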

2. (i) Define linear block codes (2)

(ii) How is the parity check matrix found? (4)

(iii) Give the syndrome decoding algorithm (4)

(iv) Design a linear block code with dmin ≥ 3 for some block length $n = 2^m - 1$ (6)
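One standard design for part (iv) is a Hamming code: take the columns of H to be all 2^m - 1 nonzero m-bit vectors. A minimum distance of at least 3 requires only that no column is zero and no two columns are equal, since dmin is the smallest number of columns of H that sum to zero. The sketch builds H for a given m and checks that condition; names are illustrative.

```python
from itertools import product

def hamming_parity_check(m):
    """H for a (2^m - 1, 2^m - 1 - m) Hamming code: columns = all nonzero m-bit vectors."""
    columns = [col for col in product((0, 1), repeat=m) if any(col)]
    return [[col[row] for col in columns] for row in range(m)]

def min_distance_at_least_3(H):
    """dmin >= 3 iff no column is zero and no two columns are equal."""
    cols = list(zip(*H))
    return all(any(c) for c in cols) and len(set(cols)) == len(cols)

for m in (3, 4):
    H = hamming_parity_check(m)
    n = len(H[0])
    print(f"m={m}: n={n}, k={n - m}, dmin>=3: {min_distance_at_least_3(H)}")
```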

3. a. Consider a (7,4) cyclic code generated by the polynomial $g(x) = 1 + x + x^3$. Calculate the codeword for the message sequence 1001 and construct the systematic generator matrix G. (8)

b. Draw the diagrams of the encoder and the syndrome calculator for the generator polynomial g(x). (8)
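A sketch of systematic cyclic encoding for part (a), with GF(2) polynomials stored as integers (bit i is the coefficient of x^i). It assumes the message 1001 is read lowest power first, m(x) = 1 + x^3, and forms the parity polynomial as the remainder of x^(n-k) m(x) divided by g(x), the same division that the shift-register encoder of part (b) performs. The rows of G are the codewords of the four unit messages.

```python
def poly_mod(a, g):
    """Remainder of a(x) / g(x) over GF(2); bit i of an int = coefficient of x^i."""
    dg = g.bit_length() - 1
    while a.bit_length() - 1 >= dg:
        a ^= g << (a.bit_length() - 1 - dg)
    return a

def cyclic_encode(msg_bits, g, n):
    """Systematic encoding c(x) = b(x) + x^(n-k) m(x), with b(x) = x^(n-k) m(x) mod g(x)."""
    k = len(msg_bits)
    m = sum(bit << i for i, bit in enumerate(msg_bits))   # msg_bits = [m0, m1, ...]
    shifted = m << (n - k)
    c = shifted | poly_mod(shifted, g)
    return [(c >> i) & 1 for i in range(n)]               # [c0, c1, ..., c_{n-1}]

g = 0b1011            # g(x) = 1 + x + x^3
n, k = 7, 4
print(cyclic_encode([1, 0, 0, 1], g, n))

# Systematic G: row i is the codeword of the i-th unit message
G = [cyclic_encode([1 if j == i else 0 for j in range(k)], g, n) for i in range(k)]
for row in G:
    print(row)
```

Under that bit ordering the codeword is 0111001 (parity bits first), and G = [P | I4] with rows 1101000, 0110100, 1110010 and 1010001.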

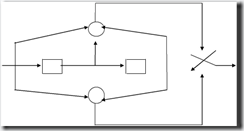

4. For the convolutional encoder shown below, encode the message sequence (10011). Also prepare the code tree for this encoder (16)

[Figure: convolutional encoder; labels: Msg Bits, two flip-flops (FF FF), modulo-2 adders (+), Path 1, Output]

5. (i) Use the (7,4) cyclic code with generator polynomial $g(x) = 1 + x + x^3$ to encode the message sequence (10111) (8)

(ii) Calculate the systematic generator matrix for the polynomial $g(x) = 1 + x + x^3$. Also draw the encoder diagram (8)

6. Verify whether $g(x) = 1 + x + x^2 + x^3 + x^4$ is a valid generator polynomial for generating a cyclic code for the message [111] (16)
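A polynomial g(x) generates an (n, k) cyclic code only if it divides x^n + 1. The sketch assumes n = k + deg g(x) = 3 + 4 = 7 for the three-bit message and checks that divisibility with GF(2) polynomial division (bit i of an integer is the coefficient of x^i).

```python
def poly_mod(a, g):
    """Remainder of a(x) / g(x) over GF(2); bit i of an int = coefficient of x^i."""
    dg = g.bit_length() - 1
    while a.bit_length() - 1 >= dg:
        a ^= g << (a.bit_length() - 1 - dg)
    return a

def is_cyclic_generator(g, n):
    """g(x) generates an (n, n - deg g) cyclic code iff g(x) divides x^n + 1."""
    return poly_mod((1 << n) | 1, g) == 0

g = 0b11111                     # g(x) = 1 + x + x^2 + x^3 + x^4
k = 3                           # message [111] has three bits
n = k + (g.bit_length() - 1)    # n - k = deg g(x) = 4, so n = 7
print(n, is_cyclic_generator(g, n), bin(poly_mod((1 << n) | 1, g)))
print(is_cyclic_generator(g, 5))   # x^5 + 1 = (1 + x) g(x)
```

Under this reading the remainder of x^7 + 1 is x^2 + 1, so g(x) does not generate a (7, 3) cyclic code; it does divide x^5 + 1.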

7. A convolutional encoder is defined by the following generator polynomials:

$g_0(x) = 1 + x + x^2 + x^3 + x^4$

$g_1(x) = 1 + x + x^3 + x^4$

$g_2(x) = 1 + x^2 + x^4$

(i) What is the constraint length of this code? (4)

(ii) How many states are in the trellis diagram of this code? (8)

(iii) What is the code rate of this code? (4)
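The three answers follow from the largest power in the generator polynomials (the memory m = 4) and the number of polynomials (three output bits per input bit). Definitions of constraint length vary between textbooks; the sketch uses K = m + 1 and notes the alternative.

```python
# Coefficient lists: index i = coefficient of x^i (tap on the i-th delay element)
generators = {
    "g0": [1, 1, 1, 1, 1],   # 1 + x + x^2 + x^3 + x^4
    "g1": [1, 1, 0, 1, 1],   # 1 + x + x^3 + x^4
    "g2": [1, 0, 1, 0, 1],   # 1 + x^2 + x^4
}

memory = max(len(g) for g in generators.values()) - 1   # shift-register stages
constraint_length = memory + 1       # convention K = m + 1; some texts quote K = m
num_states = 2 ** memory             # trellis states = contents of the m delay cells
k_in, n_out = 1, len(generators)     # one input bit -> one output bit per generator

print(f"m = {memory}, K = {constraint_length}, states = {num_states}, rate = {k_in}/{n_out}")
```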

8. Construct a convolutional encoder for the following specifications: rate efficiency = 1/2, constraint length = 4. The connections from the shift register to the modulo-2 adders are described by the following equations:

$g_1(x) = 1 + x$, $g_2(x) = x$

Determine the output codeword for the input message [1110] (16)
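A sketch of feedforward convolutional encoding for this question: each output stream is the modulo-2 convolution of the message with one generator, and the two streams are interleaved. The number of flush zeros is taken from the highest power in the given polynomials (one delay element). That is an assumption, since the stated constraint length of 4 does not match those polynomials; for this message any extra flush zeros would only add further 00 pairs.

```python
def conv_encode(message, generators):
    """Rate 1/len(generators) feedforward convolutional encoder.
    generators: coefficient lists, index i = coefficient of x^i (delay of i bits)."""
    memory = max(len(g) for g in generators) - 1
    padded = list(message) + [0] * memory          # flush the register with zeros
    output = []
    for t in range(len(padded)):
        branch = []
        for g in generators:
            bit = 0
            for i, coeff in enumerate(g):
                if coeff and t - i >= 0:
                    bit ^= padded[t - i]           # modulo-2 adder
            branch.append(bit)
        output.append(tuple(branch))
    return output

g1 = [1, 1]   # g1(x) = 1 + x
g2 = [0, 1]   # g2(x) = x
code = conv_encode([1, 1, 1, 0], [g1, g2])
print(code)
print(" ".join(f"{a}{b}" for a, b in code))
```

With one flush bit the interleaved output is 10 01 01 11 00.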

UNIT IV

1. (i) Discuss the various stages in the JPEG standard (9)

(ii) Differentiate between lossless and lossy compression techniques and give one example of each (4)

(iii) State the prefix property of Huffman codes (3)

2. With the following symbols and probabilities of occurrence, encode the message “went#” using the arithmetic coding algorithm. Compare arithmetic coding with Huffman coding principles (16)

Symbols: e n t w #

Prob : 0.3 0.3 0.2 0.1 0.1
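A sketch of the interval-narrowing step of arithmetic coding, assuming the cumulative ranges are assigned in the listed order (e: [0, 0.3), n: [0.3, 0.6), t: [0.6, 0.8), w: [0.8, 0.9), #: [0.9, 1.0)). Python's `fractions.Fraction` keeps the arithmetic exact; a practical coder would use scaled integer arithmetic instead.

```python
from fractions import Fraction as F

def arithmetic_interval(message, model):
    """Narrow [low, high) once per symbol; model = ordered (symbol, probability) pairs."""
    ranges, cum = {}, F(0)
    for sym, p in model:
        ranges[sym] = (cum, cum + p)                 # cumulative sub-range of [0, 1)
        cum += p
    low, high = F(0), F(1)
    for sym in message:
        width = high - low
        s_low, s_high = ranges[sym]
        low, high = low + width * s_low, low + width * s_high
        print(f"{sym}: [{float(low):.6g}, {float(high):.6g})")
    return low, high

model = [("e", F("0.3")), ("n", F("0.3")), ("t", F("0.2")), ("w", F("0.1")), ("#", F("0.1"))]
low, high = arithmetic_interval("went#", model)
```

The final interval is [0.81602, 0.8162), so any number in that range, for example 0.8161, identifies “went#”.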

3. (a) Draw the JPEG encoder schematic and explain it (10)

(b) Assuming a quantization threshold value of 16, derive the resulting quantization error for each of the following DCT coefficients:

127, 72, 64, 56, -56, -64, -72, -128 (6)
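A sketch assuming the usual rule: divide each coefficient by the threshold, round to the nearest integer, and multiply back; the error is the original minus the dequantized value. Halves are rounded away from zero here, but for 72 and 56 (4.5 and 3.5 after division) either rounding direction gives an error of magnitude 8.

```python
def quantize(coeff, threshold):
    """Divide by the threshold and round to the nearest integer (halves away from zero)."""
    sign = -1 if coeff < 0 else 1
    return sign * ((2 * abs(coeff) + threshold) // (2 * threshold))

threshold = 16
coeffs = [127, 72, 64, 56, -56, -64, -72, -128]
for c in coeffs:
    q = quantize(c, threshold)
    dequantized = q * threshold
    print(f"{c:5d} -> {q:3d} -> {dequantized:5d}, error = {c - dequantized:3d}")
```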

4. (i) Explain arithmetic coding with a suitable example (12)

(ii) Compare the arithmetic coding algorithm with Huffman coding (4)

5. (i) Draw the JPEG encoder block diagram and explain each block (14)

(ii) Why are DC and AC coefficients encoded separately in JPEG? (2)
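A sketch of the two paths for part (ii), with illustrative names. The DC coefficient of each block is usually close to that of the previous block, so it is coded as a difference from its predecessor; the AC coefficients are read out in zig-zag order so that the many high-frequency zeros form long runs for run-length coding.

```python
def zigzag_order(n=8):
    """(row, col) pairs of an n x n block in JPEG zig-zag order."""
    cells = [(r, c) for r in range(n) for c in range(n)]
    # walk anti-diagonals r + c = s; odd s runs downward (row increasing), even s upward
    return sorted(cells, key=lambda rc: (rc[0] + rc[1],
                                          rc[0] if (rc[0] + rc[1]) % 2 else -rc[0]))

def dc_differences(dc_values):
    """DC terms of successive blocks are sent as differences from the previous block."""
    return [dc_values[0]] + [b - a for a, b in zip(dc_values, dc_values[1:])]

order = zigzag_order()
print([r * 8 + c for r, c in order][:10])
print(dc_differences([12, 13, 11, 11, 10]))  # hypothetical DC values of five blocks
```

The first ten zig-zag positions, as row-major indices, come out as 0, 1, 8, 16, 9, 2, 3, 10, 17, 24.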

6. (a) Discuss in brief the principles of compression (12)

(b) In the context of compression for text, image, audio and video, which of the compression techniques discussed above are suitable, and why? (4)

7. (i) Investigate the ‘block preparation’ and ‘quantization’ phases of the JPEG compression process, with diagrams wherever necessary (8)
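A sketch of the block preparation step: each component matrix is divided into 8 × 8 blocks before the DCT. The edge-padding rule (repeating the last row and column when a dimension is not a multiple of 8) is an assumption made for the illustration, as is the test image.

```python
def prepare_blocks(image, size=8):
    """Split a 2-D list of pixel values into size x size blocks, row by row.
    Edges are padded by repeating the last row/column (an assumed padding rule)."""
    rows, cols = len(image), len(image[0])
    padded_rows = -(-rows // size) * size        # round up to a multiple of size
    padded_cols = -(-cols // size) * size
    padded = [[image[min(r, rows - 1)][min(c, cols - 1)] for c in range(padded_cols)]
              for r in range(padded_rows)]
    blocks = []
    for top in range(0, padded_rows, size):
        for left in range(0, padded_cols, size):
            blocks.append([row[left:left + size] for row in padded[top:top + size]])
    return blocks

image = [[(3 * r + c) % 256 for c in range(12)] for r in range(10)]   # hypothetical 10 x 12 image
blocks = prepare_blocks(image)
print(len(blocks), len(blocks[0]), len(blocks[0][0]))
```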

(ii) Elucidate the GIF and TIFF image compression formats (8)

UNIT V

1. (i) Explain the principles of perceptual coding (14)

(ii) Why is LPC not suitable for encoding music signals? (2)

2. (i) Explain the encoding procedure of I, P and B frames in video encoding with suitable diagrams (14)

(ii) What are the special features of the MPEG-4 standard? (2)

3. Explain the Linear Predictive Coding (LPC) model of analysis and synthesis of speech signals. State the advantages of coding speech signals at low bit rates (16)
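A sketch of LPC analysis and synthesis under simple assumptions: predictor coefficients come from the autocorrelation method via the Levinson-Durbin recursion, the analysis filter produces the residual, and the all-pole synthesis filter rebuilds the signal from it. A real LPC vocoder does not transmit the residual; it sends the coefficients together with pitch, voicing and gain, and excites the synthesis filter with a pulse train or noise, which is where the low bit rate comes from. The decaying test signal is illustrative.

```python
def autocorrelation(x, max_lag):
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) for k in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Predictor coefficients a[1..p] for x[n] ~ sum_j a[j] x[n-j] (autocorrelation method)."""
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err                       # reflection coefficient
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1 - k * k                    # remaining prediction-error energy
    return a[1:], err

def analyse(x, a):
    """Analysis filter: residual e[n] = x[n] - sum_j a[j] x[n-j]."""
    return [x[n] - sum(aj * x[n - j] for j, aj in enumerate(a, 1) if n - j >= 0)
            for n in range(len(x))]

def synthesise(e, a):
    """All-pole synthesis filter: x[n] = e[n] + sum_j a[j] x[n-j]."""
    x = []
    for n, en in enumerate(e):
        x.append(en + sum(aj * x[n - j] for j, aj in enumerate(a, 1) if n - j >= 0))
    return x

signal = [0.9 ** n for n in range(200)]     # hypothetical test signal
a, residual_energy = levinson_durbin(autocorrelation(signal, 2), order=2)
e = analyse(signal, a)
rebuilt = synthesise(e, a)
print([round(c, 4) for c in a])
print(max(abs(u - v) for u, v in zip(signal, rebuilt)))
```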

4. Explain the encoding procedure of I, P and B frames in video compression techniques. State the intended application of each of the following video coding standards: MPEG-1, MPEG-2, MPEG-3, MPEG-4 (16)

5. (i) What are macroblocks and GOBs? (4)

(ii) On what factors does the quantization threshold depend in the H.261 standard? (3)

(iii) Discuss the MPEG compression techniques (9)

6. (i) Discuss the various Dolby audio coders (8)

(ii) Discuss any two audio coding techniques used in MPEG (8)

7. Discuss in brief the following audio coders:

(i) MPEG audio coders (8)

(ii) Dolby audio coders (8)

8. (i) Explain the ‘motion estimation’ and ‘motion compensation’ phases of the P- and B-frame encoding process, with diagrams wherever necessary (12)
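A sketch of full-search block matching for motion estimation, using the sum of absolute differences (SAD) as the matching criterion; the frames, block size and search range are illustrative. Motion compensation then sends the motion vector together with the difference between the current block and the matched reference block, which is all zeros in this contrived example.

```python
def sad(cur, ref, top, left, dy, dx, size):
    """Sum of absolute differences between a current block and a displaced reference block."""
    return sum(abs(cur[top + i][left + j] - ref[top + dy + i][left + dx + j])
               for i in range(size) for j in range(size))

def full_search(cur, ref, top, left, size, search_range):
    """Exhaustive block matching; returns (best SAD, dy, dx)."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            r, c = top + dy, left + dx
            if r < 0 or c < 0 or r + size > h or c + size > w:
                continue                       # candidate lies outside the reference frame
            cost = sad(cur, ref, top, left, dy, dx, size)
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best

# Hypothetical frames: cur[r][c] = ref[r + 1][c + 2] (clamped at the frame edge)
ref = [[16 * r + c for c in range(16)] for r in range(16)]
cur = [[ref[min(r + 1, 15)][min(c + 2, 15)] for c in range(16)] for r in range(16)]
cost, dy, dx = full_search(cur, ref, top=4, left=4, size=4, search_range=3)
print(f"motion vector = ({dy}, {dx}), SAD = {cost}")
```

Here the search returns the motion vector (1, 2) with a SAD of 0.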

(ii) Write a short note on the ‘macroblock’ format of the H.261 compression standard (4)
